With this information, you’ll have a great foundation with which to follow this feature.

We’ve picked this timeframe because it marks the start of when GPUs had a unified shader structure.

However, you could have two chips that are the same size, but have vastly differing transistor counts.

Perhaps the most contentious metric we’ll be using is the figure for the GPU’s power consumption.

It is possible to directly measure the power consumption of a graphics card.

We’re going to directly compare two metrics: GFLOPS and unit die density.

The same is true for the y-axis, as this plots GFLOPS per unit die density.
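To make the two quantities concrete, here is a minimal Python sketch of how they could be calculated. It assumes die density means millions of transistors per square millimetre, and that "GFLOPS per unit die density" is simply peak GFLOPS divided by that figure. The function names and the sample numbers are hypothetical and do not describe any real chip.

```python
def die_density(transistors_millions: float, die_area_mm2: float) -> float:
    """Return die density in millions of transistors per square millimetre."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    return transistors_millions / die_area_mm2


def gflops_per_unit_density(gflops: float,
                            transistors_millions: float,
                            die_area_mm2: float) -> float:
    """Return peak GFLOPS divided by die density (assumed definition)."""
    return gflops / die_density(transistors_millions, die_area_mm2)


# Hypothetical chip: 10,000 million transistors on a 500 mm^2 die,
# with a peak output of 8,000 GFLOPS.
density = die_density(10_000, 500)
scaled = gflops_per_unit_density(8_000, 10_000, 500)
print(f"{density:.1f} million transistors per mm^2")
print(f"{scaled:.1f} GFLOPS per unit die density")
```

Dividing by density rather than by raw transistor count means two chips of the same size but different transistor counts land in different places on the chart.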

The more transistors you have packed into a square mm, the more performance you would expect.

Which is what you would expect!

But there are some interesting data points.

The first of which are the TU102 and GV100.

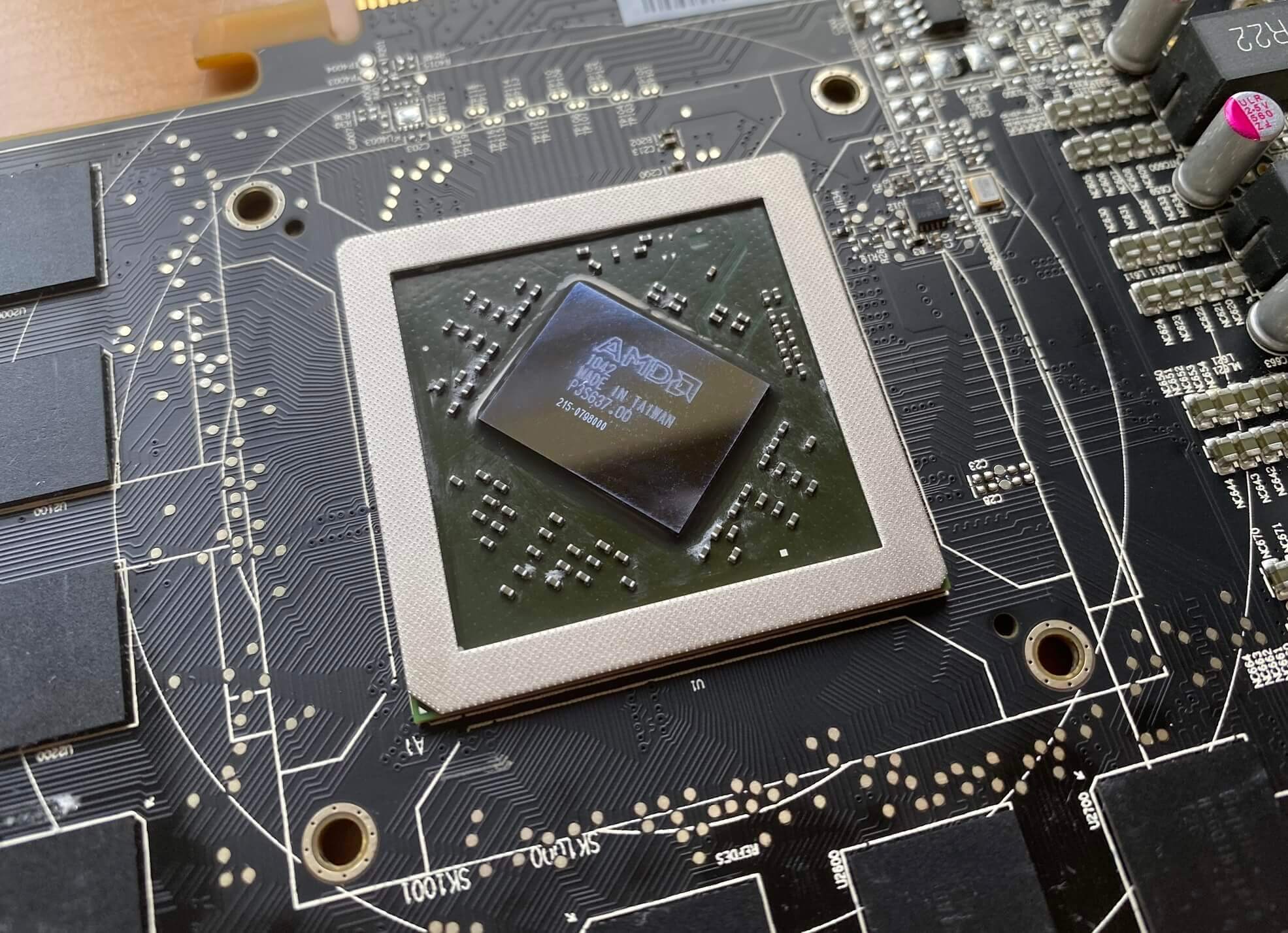

Across from the GP108 is AMD’s Fiji chip that powered its Radeon R9 Fury series.

The Fiji design ran rather hot, which makes semiconductor processors less power efficient due to increased leakage.

All chips leak, but the rate of loss increases with temperature.

So, what’s going on here?

It packs a huge number of unified shader cores into a layout that focuses heavily on FP32 throughput.

However, programming the machine to achieve this performance was not easily done and it lacked flexibility.

If we ignore the outliers, the most efficient GPUs in our chart are the GP102 and GP104.

This gives us a very different picture…

In other words, they’ve wanted to get more GPU chips per manufactured wafer.

So will AMD and Nvidia continue this way with their next GPUs?

But what about Nvidia?

So How Have GPUs Become More Efficient?

In some cases, the increase has been astonishing…

Take Nvidia’s G92 and TU102 processors.

When it appeared in October 2007, it was well received for its performance and power requirements.

Speaking of clocks, this is another factor to consider.

The capability doesn’t impact our efficiency results though, as we’ve only looked at peak processing output (i.e. at the maximum clock speeds), but it does affect how the card performs for the general consumer.
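For readers who want to see what "peak processing output" means in numbers, the sketch below uses the common rule of thumb that each unified shader can issue one fused multiply-add (two floating point operations) per clock. It also assumes efficiency is expressed as GFLOPS per watt of rated board power. The function names and the example figures are hypothetical and are not taken from any chip in this feature.

```python
def peak_fp32_gflops(shader_cores: int, clock_mhz: float,
                     flops_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in GFLOPS.

    Assumes every shader core completes `flops_per_clock` operations
    each cycle (2 for a fused multiply-add) at the maximum clock speed.
    """
    return shader_cores * flops_per_clock * clock_mhz / 1000.0


def gflops_per_watt(gflops: float, power_watts: float) -> float:
    """Peak GFLOPS divided by rated power (assumed efficiency metric)."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return gflops / power_watts


# Hypothetical GPU: 2,048 shader cores at a 1,500 MHz maximum clock,
# rated at 200 W.
peak = peak_fp32_gflops(2048, 1500)
print(f"Peak output: {peak:.0f} GFLOPS")
print(f"Efficiency: {gflops_per_watt(peak, 200):.2f} GFLOPS per watt")
```

Because the calculation uses the maximum clock only, a card that spends most of its time at lower clocks will deliver less than this figure in everyday use.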

The units are connected to the cache and local memory with high speed, wide interconnects.

These changes certainly help in processing 3D graphics, but they would be considered overkill for most games.

Nvidia’s CUDA, AMD’s ROCm, OpenCL) as they involve lots of complex, massively-parallel calculations.

And that’s good news, no matter who’s making it or what it’s being used for.