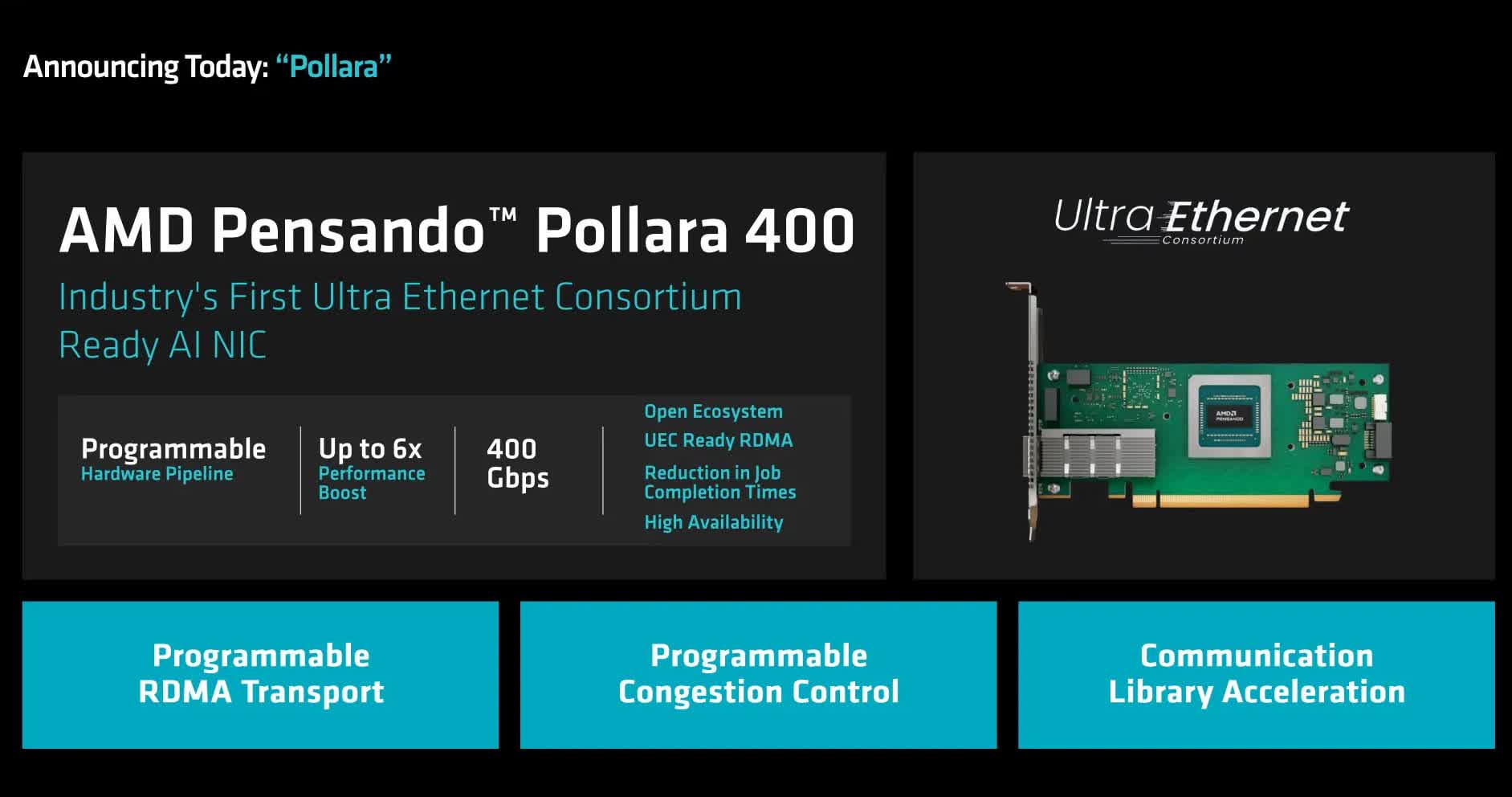

AMD’s introduction of the Pollara 400 underscores the rising importance of specialized hardware in the AI ecosystem.

The rise of generative AI and LLMs has exposed critical shortcomings in conventional Ethernet networks.

Traditional Ethernet, originally designed for general-purpose computing, has struggled to meet these specialized needs.

And yet Ethernet remains the preferred choice for AI cluster networking due to its widespread adoption.

However, the growing gap between Ethernet’s capabilities and the demands of AI workloads has become increasingly evident.

Additionally, the Pollara 400 accelerates communication libraries commonly used in AI workloads.

AMD enters the back-end AI networking game with a UEC (Ultra Ethernet Consortium) NIC.

Did not expect this one, but I understand the Nvidia total-solutions posture has to be driving it.

Will have to do some work to get underneath this.