Feynman suggested that the strange physics of quantum mechanics could be used to perform calculations.

The field of quantum computing was born.

In the 40-plus years since, it has become an intensive area of research in computer science.

Questions and uncertainties still remain about the best ways to reach this milestone.

Explainer: What is Quantum Computing?

What exactly is quantum computing, and how close are we to seeing quantum computers enter wide use?

Classical computers process information using combinations of "bits", their smallest units of data.

These bits have values of either 0 or 1.

Quantum computers, on the other hand, use quantum bits, or qubits.

Unlike classical bits, qubits don’t just represent 0 or 1.

Thanks to a property called quantum superposition, qubits can be in multiple states simultaneously.

This means a qubit can be 0, 1, or both at the same time.

This is what gives quantum computers the ability to process massive amounts of data and information simultaneously.
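As a rough illustration of superposition, a single qubit can be written as a pair of numbers (amplitudes), one for 0 and one for 1. The sketch below is a plain-Python toy, not code for a real quantum device; the function name `measurement_probabilities` and the choice to normalize the amplitudes are assumptions made for this example. It shows how the amplitudes translate into the chance of reading 0 or 1 when the qubit is measured.

```python
import math


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for a qubit with amplitude alpha on 0 and beta on 1.

    Illustrative helper (hypothetical name): the probability of each outcome
    is the squared magnitude of its amplitude, normalized to sum to 1.
    """
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("amplitudes cannot both be zero")
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


# An equal superposition: the qubit is "both" 0 and 1 until it is measured.
amp = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(amp, amp)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")

# A qubit that is definitely 0 behaves like a classical bit.
print(measurement_probabilities(1, 0))
```

With equal amplitudes, each outcome comes up about half the time; with all the amplitude on 0, the qubit always reads 0.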

Think about the extremely complex problem of rescheduling airline flights after a delay or an unexpected incident.

Every day there are more than 45,000 flights, organized by over 500 airlines, connecting more than 4,000 airports.

This problem would take a classical computer years to solve.

Qubits also have a physical property known as entanglement.

This is something that, again, has no counterpart in classical computing.

Entanglement allows quantum computers to solve certain problems exponentially faster than traditional computers can.

The short answer is no, at least not in the foreseeable future.

However, they are not suited to every kind of task.

This makes them extremely predictable, robust and less prone to errors than quantum machines.

There are at least two reasons for that.

The first one is practical.

Building a quantum computer that can run reliable calculations is extremely difficult.

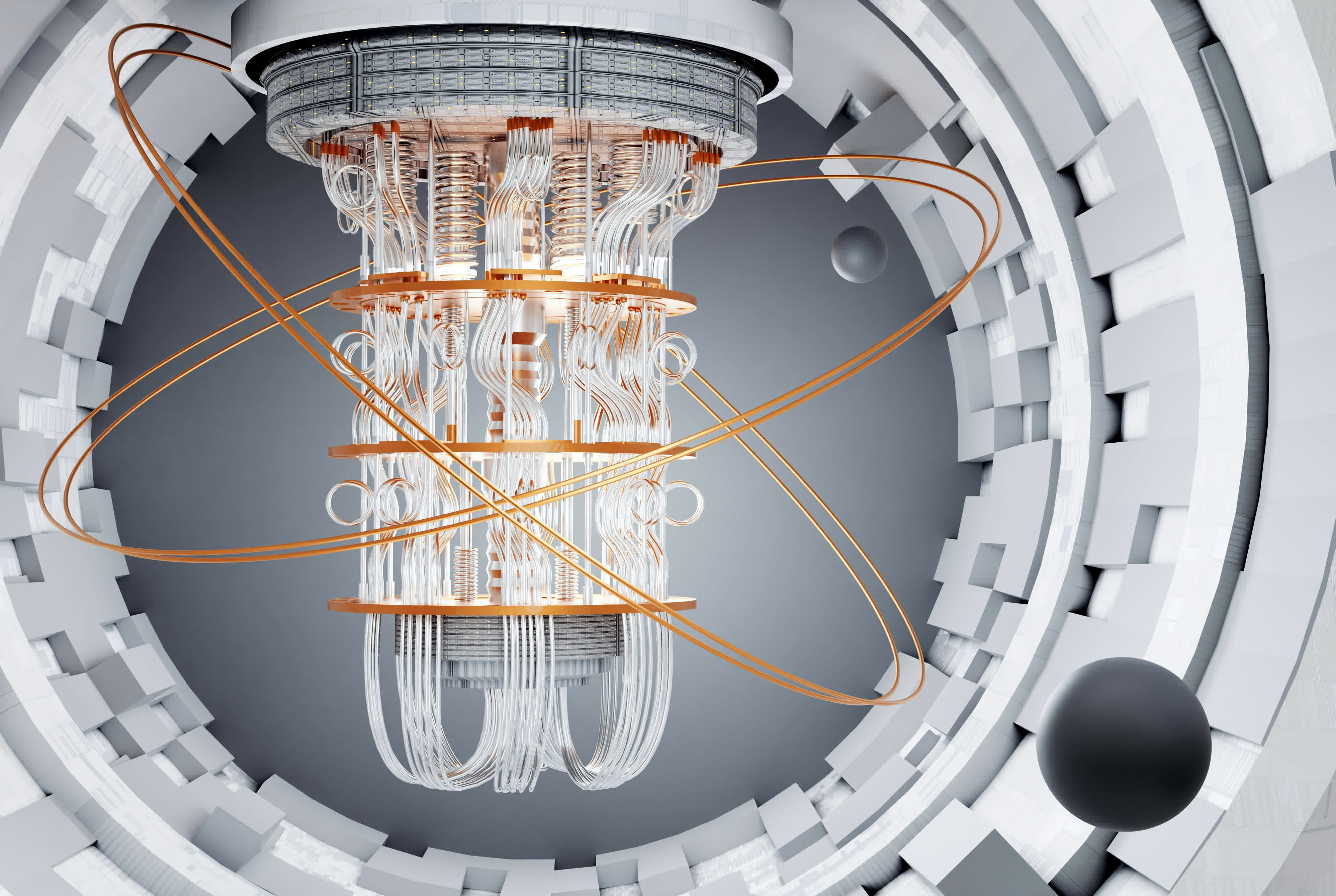


The second reason lies in the inherent uncertainty in dealing with qubits.

Physicists therefore describe qubits and their calculations in terms of probabilities.

To address this uncertainty, quantum algorithms are typically run multiple times.

The results are then analyzed statistically to determine the most likely solution.

This approach allows researchers to extract meaningful information from the inherently probabilistic quantum computations.
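The idea of running an algorithm many times and keeping the most frequent result can be sketched with an ordinary random-number generator. Everything here is a stand-in: `run_once` is a hypothetical function that imitates one noisy run, and the answer `"101"` and the 60% success rate are made-up values chosen only to illustrate the statistics, not figures from any real machine.

```python
import random
from collections import Counter


def run_once(rng: random.Random, correct: str = "101", p_correct: float = 0.6) -> str:
    """Imitate one run of a probabilistic algorithm (hypothetical stand-in).

    With probability p_correct the run returns the right answer; otherwise
    it returns a uniformly random 3-bit string.
    """
    if rng.random() < p_correct:
        return correct
    return format(rng.randrange(8), "03b")


def most_likely_answer(shots: int, seed: int = 0) -> tuple[str, Counter]:
    """Repeat the run `shots` times and return the most frequent outcome."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    counts = Counter(run_once(rng) for _ in range(shots))
    answer, _ = counts.most_common(1)[0]
    return answer, counts


answer, counts = most_likely_answer(shots=1000)
print("Most frequent outcome:", answer)
print("All outcomes:", dict(counts))
```

No single run can be trusted, but over many repetitions the correct answer stands out clearly from the noise.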

They are all working on making quantum computers more reliable, scalable and accessible.

Manufacturers are increasingly prioritizing ways to correct the errors that quantum computers are prone to.

Google’s latest quantum chip, Willow, recently demonstrated remarkable progress in this area.

The more qubits Google used in Willow, the more it reduced the errors.
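Why adding more units can lower the error rate is easiest to see in a classical analogy. The sketch below is not the error-correction scheme used in Willow; it assumes a simple repetition code, in which one bit is stored in several copies and read back by majority vote, and it assumes each copy flips independently with the same probability. The function name `logical_error_rate` and the 10% flip rate are illustrative choices.

```python
from math import comb


def logical_error_rate(n: int, p: float) -> float:
    """Probability that a majority vote over n copies gives the wrong bit.

    Assumes each copy flips independently with probability p (a classical
    simplification). The vote fails when more than half the copies flip.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number")
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# With a 10% chance of each copy flipping, more copies mean fewer wrong votes.
for copies in (1, 3, 5, 7):
    print(copies, logical_error_rate(copies, 0.1))
```

The improvement only appears when the individual error rate is low enough (below 50% in this toy model); above that, adding copies makes things worse, which is one reason the quality of each qubit matters as much as the count.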

The probabilistic nature of these machines represents a fundamental difference between quantum and classical computing.

It is what makes them fragile and hard to develop and scale.